It can be difficult to determine how generative AI arrives at its output.

On March 27, Anthropic published a blog post introducing a tool for looking inside a large language model to follow its behavior. The goal is to answer questions such as what language its model Claude “thinks” in, whether the model plans ahead or predicts one word at a time, and whether the AI’s own explanations of its reasoning reflect what is actually happening.

In many cases, the explanation does not match the actual processing. Claude generates its own explanations for its reasoning, so those explanations can contain hallucinations.

A ‘microscope’ for ‘AI biology’

Anthropic published a paper on “mapping” Claude’s internal structures in May 2024, and its new paper, which describes the “features” a model uses to link concepts together, builds on that work. Anthropic calls its research part of developing a “microscope” for “AI biology.”

In the first paper, Anthropic researchers identified “features” connected by “circuits,” which are paths from Claude’s input to its output. The second paper focused on Claude 3.5 Haiku, examining 10 behaviors to diagram how the AI arrives at its results. Anthropic found that:

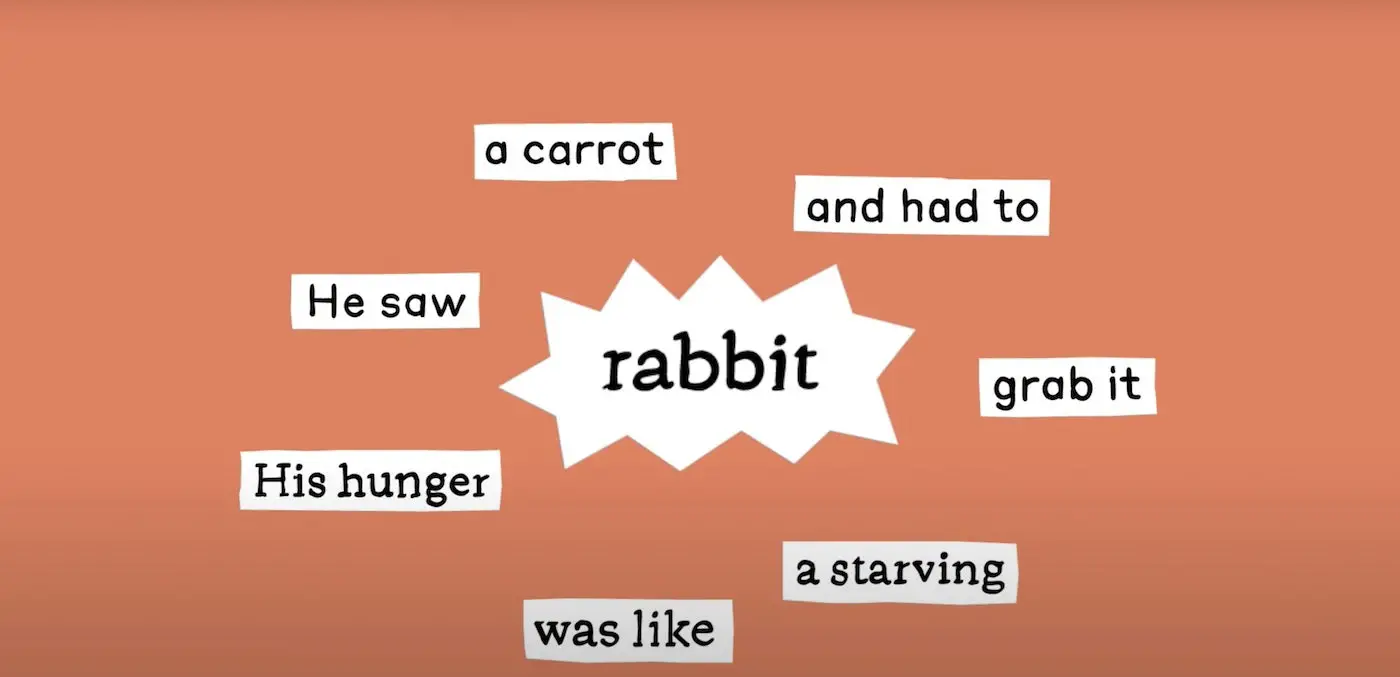

- Claude definitely plans ahead, particularly on tasks such as writing rhyming poetry.

- Within the model, there is a conceptual space shared between languages.

- Claude can “make up fake reasoning” when presenting its thought process to the user.

The researchers discovered how Claude translates concepts between languages by examining the overlap in how the AI processes questions in different languages. For example, the prompt “the opposite of small is” in different languages is routed through the same features for “the concepts of smallness and oppositeness.”

The point about fake reasoning fits with Apollo Research’s studies of Claude 3.7 Sonnet’s ability to detect an ethics test. When asked to explain its reasoning, Claude “will give a plausible-sounding argument designed to agree with the user rather than following logical steps,” Anthropic found.


Generative AI is not magic; it is sophisticated computing, and it follows rules. However, its black-box nature means it can be difficult to determine what those rules are and under what conditions they arise. For example, Claude showed a general hesitation to give speculative answers, but it may work out its end goal well before its output shows it: “In a response to an example jailbreak, we found that the model recognized it had been asked for dangerous information well before it was able to gracefully bring the conversation back around,” the researchers found.

How does an AI solve math problems?

I often use ChatGPT for math problems, and the model tends to get the right answer despite some hallucinations in the middle of its reasoning. So I wondered about one of Anthropic’s points: Does the model treat numbers as a kind of letter? Anthropic may have pinpointed exactly why models behave this way: Claude follows multiple computational paths at the same time to solve math problems.

“One path computes a rough approximation of the answer and the other focuses on precisely determining the last digit of the sum,” Anthropic wrote.

So it makes sense that the output can be correct even when the step-by-step explanation is not.
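To make the two-path idea concrete, here is a toy Python sketch. It is a hypothetical analogy, not how Claude actually computes: the function names and the rounding trick used for the “rough” path are assumptions chosen only so the example works. One function returns a ten-number window guaranteed to contain the sum, a second computes only the exact last digit, and combining them recovers the answer (Anthropic’s own example was 36 + 59).

```python
# Toy illustration of "two parallel paths" for addition.
# Hypothetical sketch only: NOT Claude's real circuitry. It mimics the idea that
# one path gets a rough magnitude and another gets the exact last digit.


def rough_path(a: int, b: int) -> range:
    """Low-precision path: a window of ten consecutive integers containing a + b.

    Assumption for this toy: add `a` to `b` rounded to the nearest ten (halves
    round up). The error b - rounded_b lies in [-5, 4], so the true sum lies in
    [estimate - 5, estimate + 4].
    """
    rounded_b = ((b + 5) // 10) * 10
    estimate = a + rounded_b
    return range(estimate - 5, estimate + 5)


def last_digit_path(a: int, b: int) -> int:
    """High-precision path: only the ones digit of the sum, computed exactly."""
    return (a % 10 + b % 10) % 10


def combine(a: int, b: int) -> int:
    """Pick the one number in the rough window that has the right last digit."""
    if a < 0 or b < 0:
        raise ValueError("this toy only handles non-negative integers")
    digit = last_digit_path(a, b)
    # Ten consecutive integers contain each last digit exactly once.
    candidates = [n for n in rough_path(a, b) if n % 10 == digit]
    if len(candidates) != 1:
        raise RuntimeError("rough window should contain exactly one match")
    return candidates[0]


if __name__ == "__main__":
    print(combine(36, 59))  # 95
```

Neither path on its own “knows” the full answer, which loosely mirrors the article’s point: the result can be right even though no step resembles the carry-the-one method a person would describe.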

Claude’s first step is to “analyze the structure of the numbers,” finding patterns in the same way it would find patterns in letters and words. Claude cannot explain this process externally, just as a person cannot tell which of their neurons are firing; instead, Claude describes the way a human would solve the problem. The Anthropic researchers speculated that this is because the AI was trained on explanations of math written by people.

What’s next for Anthropic’s LLM research?

Interpreting the “circuits” is very difficult because of how dense generative AI’s computations are. It took a human a few hours to interpret the circuits produced by prompts of only “tens of words,” Anthropic said. The researchers speculate that interpreting how generative AI works may require help from AI itself.

Anthropic said its LLM research is intended to ensure that AI aligns with human ethics; as such, the company is looking into real-time monitoring, model character improvements, and model alignment.
