On January 29, US-based Wiz Research announced that it had disclosed a DeepSeek database that was previously open to the public, exposing chat logs and other sensitive information. DeepSeek locked down the database, but the discovery highlights potential risks with generative AI models, particularly international projects.

DeepSeek shook the technology industry last week when the Chinese company's AI models rivaled those of US generative AI leaders. In particular, DeepSeek's R1 competes with OpenAI o1 on some benchmarks.

How did Wiz Research discover DeepSeek's public database?

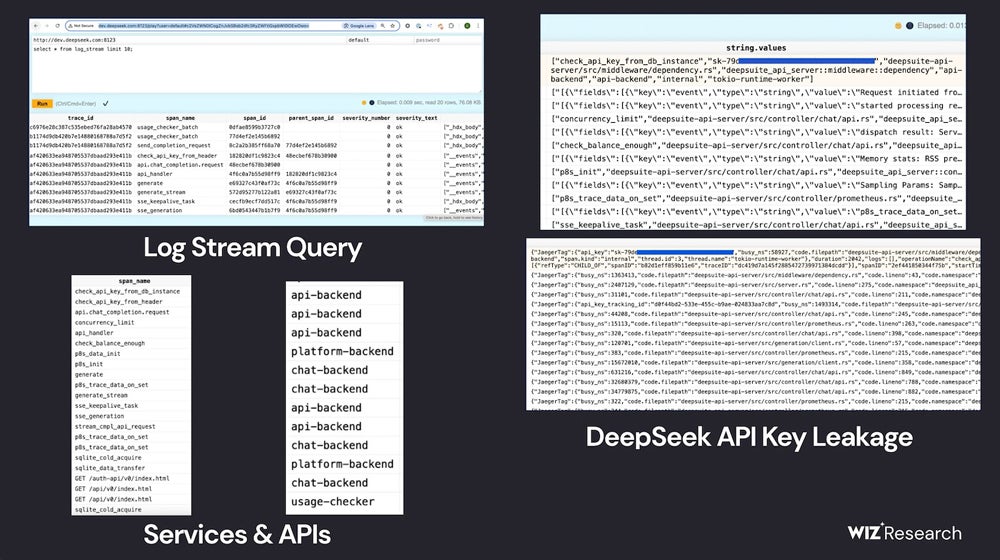

In a blog post presenting Wiz Research's work, cloud security researcher Gal Nagli outlined how the team found a publicly accessible ClickHouse database belonging to DeepSeek. The database opened up potential paths for control of the database and for privilege escalation attacks. Inside the database, Wiz Research could read chat history, backend data, log streams, API secrets, and operational details.

The team found the ClickHouse database “within minutes” while assessing DeepSeek's potential vulnerabilities.

“We were shocked and also felt a great sense of urgency to act quickly, given the extent of the discovery,” Nagli told TechRepublic in an email.

They first assessed DeepSeek's internet-facing subdomains, and two open ports struck them as unusual; those ports led to DeepSeek's database hosted on ClickHouse, the open-source database management system. By browsing the tables in ClickHouse, Wiz Research found chat history, API keys, operational metadata, and more.
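
For teams that want to run a similar check against their own infrastructure, the idea of looking for unexpectedly open database ports can be sketched in a few lines of Python. This is a minimal illustration, not Wiz Research's actual tooling. The article does not say which two ports were open, so the sketch assumes ClickHouse's documented defaults (8123 for the HTTP interface, 9000 for the native TCP protocol), and the function names are hypothetical. Only probe hosts you are authorized to test.

```python
from __future__ import annotations

import socket

# Assumption: ClickHouse's default ports. The article does not name the
# two ports that Wiz Research found open.
CLICKHOUSE_DEFAULT_PORTS = {8123: "HTTP interface", 9000: "native TCP protocol"}


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures.
        return False


def exposed_ports(host: str, ports=CLICKHOUSE_DEFAULT_PORTS, timeout: float = 2.0) -> list[int]:
    """Return the subset of `ports` that accept TCP connections on `host`."""
    return [port for port in sorted(ports) if is_port_open(host, port, timeout)]
```

Calling `exposed_ports("db.internal.example.com")` (a placeholder hostname) from outside the network perimeter returns the ports an external attacker could also reach; an empty list is the desired result for a database that should not be public.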

The Wiz Research team noted that they did not perform “intrusive queries” during the exploration process, in line with ethical research practices.

What does the publicly available database mean for DeepSeek's AI?

Wiz Research informed DeepSeek of the exposure, and the AI company locked down the database; therefore, DeepSeek AI products should not be affected.

However, the possibility that the database was open to attackers underscores the complexity of securing generative AI products.

“While much of the attention around AI security is focused on futuristic threats, the real dangers often come from basic risks, such as accidental external exposure of databases,” Nagli wrote in a blog post.

IT professionals should be aware of the dangers of adopting new and untested products, especially generative AI, too quickly; give researchers time to find bugs and flaws in the systems. If possible, include cautious timelines in company generative AI use policies.

See: Protecting and securing data has become more complicated in the age of generative AI.

“While organizations are chasing AI tools and services from a growing number of startups and providers, it’s important to remember that we are entrusting these companies with sensitive data,” Nagli said.

Depending on your location, IT team members may need to be aware of regulations or security concerns that may apply to generative AI models originating in China.

“For example, certain facts in China's history or past are not presented transparently or fully by the models,” Unmesh Kulkarni, head of gen AI at the data science firm Tredence, noted in an email to TechRepublic. “The data privacy implications of calling the hosted model are also unclear, and most global companies would not be willing to do that. However, one should remember that DeepSeek models are open source and can be deployed locally within a company's private cloud or network environment. This would address the data privacy or leakage issues.”

Nagli also recommended self-hosted models when TechRepublic reached him by email.

“Implementing strict access controls, data encryption, and network segmentation can further reduce the risks,” he wrote. “Organizations should ensure that they have visibility and governance of the entire AI stack, so that they can analyze all risks, including the use of malicious models, exposure of training data, sensitive data in training, vulnerabilities in AI SDKs, exposure of AI services, and other toxic risk combinations that may be exploited by attackers.”
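
One way to act on the access control and network segmentation advice is to audit where internal services listen and whether they require authentication. The Python sketch below is illustrative only: the inventory format (`name`, `bind`, `auth_required`) and both function names are hypothetical, and a real audit would draw on actual configuration or cloud inventory data.

```python
import ipaddress


def classify_bind_address(address: str) -> str:
    """Classify a listen address by how widely it exposes a service."""
    ip = ipaddress.ip_address(address)
    if ip.is_loopback:
        return "local-only"
    # Check "unspecified" (0.0.0.0 or ::) before is_private, because
    # Python also reports 0.0.0.0 as private.
    if ip.is_unspecified:
        return "all-interfaces"
    if ip.is_private:
        return "private-network"
    return "public"


def risky_services(services):
    """Return (name, exposure) for broadly exposed services without auth.

    `services` is a hypothetical inventory: an iterable of dicts with the
    keys "name", "bind", and "auth_required".
    """
    findings = []
    for svc in services:
        exposure = classify_bind_address(svc["bind"])
        if exposure in ("all-interfaces", "public") and not svc["auth_required"]:
            findings.append((svc["name"], exposure))
    return findings
```

A service flagged here is not necessarily reachable from the internet, since firewalls may still block it, but an unauthenticated database listening on all interfaces is the kind of basic risk Nagli describes and is worth reviewing first.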
