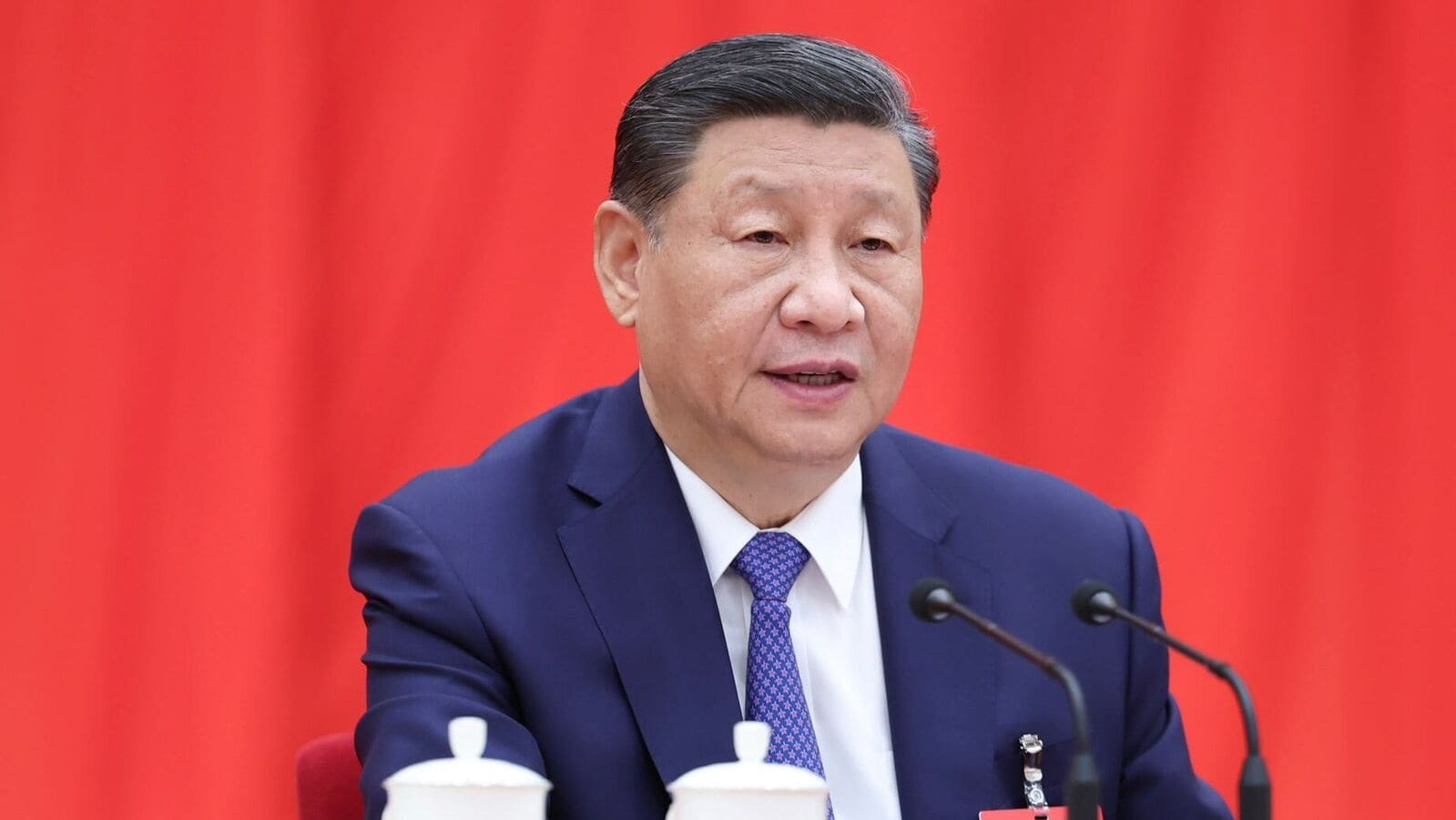

IN JULY last year Henry Kissinger travelled to Beijing for the final time before his death. Among the messages he delivered to China’s ruler, Xi Jinping, was a warning about the catastrophic risks of artificial intelligence (AI). Since then American tech bosses and ex-government officials have quietly met their Chinese counterparts in a series of informal gatherings dubbed the Kissinger Dialogues. The conversations have focused in part on how to protect the world from the dangers of AI. American and Chinese officials are thought to have also discussed the subject (along with many others) when America’s national security adviser, Jake Sullivan, visited Beijing from August 27th to 29th.

Many in the tech world think that AI will come to match or surpass the cognitive abilities of humans. Some developers predict that artificial general intelligence (AGI) models will one day be able to learn unaided, which could make them uncontrollable. Those who believe that, left unchecked, AI poses an existential risk to humanity are called “doomers”. They tend to advocate stricter regulations. On the other side are “accelerationists”, who stress AI’s potential to benefit humanity.

Western accelerationists often argue that competition with Chinese developers, who are uninhibited by strong safeguards, is so fierce that the West cannot afford to slow down. The implication is that the debate in China is one-sided, with accelerationists having the most say over the regulatory environment. In fact, China has its own AI doomers, and they are increasingly influential.

Until recently, China’s regulators have focused on the risk of rogue chatbots saying politically incorrect things about the Communist Party, rather than that of cutting-edge models slipping out of human control. In 2023 the government required developers to register their large language models. Algorithms are regularly marked on how well they comply with socialist values and whether they might “subvert state power”. The rules are also meant to prevent discrimination and leaks of customer data. But, in general, AI-safety regulations are light. Some of China’s more onerous restrictions were rescinded last year.

China’s accelerationists want to keep things this way. Zhu Songchun, a party adviser and director of a state-backed programme to develop AGI, has argued that AI development is as important as the “Two Bombs, One Satellite” project, a Mao-era push to produce long-range nuclear weapons. Earlier this year Yin Hejun, the minister of science and technology, used an old party slogan to press for faster progress, writing that development, including in the field of AI, was China’s greatest source of security. Some economic policymakers warn that an over-zealous pursuit of safety will harm China’s competitiveness.

But the accelerationists are getting pushback from a clique of elite scientists with the party’s ear. Most prominent among them is Andrew Chi-Chih Yao, the only Chinese person to have won the Turing award for advances in computer science. In July Mr Yao said AI posed a greater existential risk to humans than nuclear or biological weapons. Zhang Ya-Qin, the former president of Baidu, a Chinese tech giant, and Xue Lan, the chairman of the state’s expert committee on AI governance, also reckon that AI may threaten the human race. Yi Zeng of the Chinese Academy of Sciences believes that AGI models will eventually see humans as humans see ants.

The influence of such arguments is increasingly on display. In March an international panel of experts meeting in Beijing called on researchers to kill models that appear to seek power or show signs of self-replication or deceit. A short time later the risks posed by AI, and how to control them, became a subject of study sessions for party leaders. A state body that funds scientific research has begun offering grants to researchers who study how to align AI with human values. State labs are doing increasingly advanced work in this domain. Private firms have been less active, but more of them have at least begun paying lip service to the risks of AI.

Speed up or slow down?

The debate over how to approach the technology has led to a turf war between China’s regulators. The industry ministry has called attention to safety concerns, telling researchers to test models for threats to humans. But it seems that most of China’s securocrats see falling behind America as a bigger risk. The science ministry and state economic planners also favour faster development. A national AI law slated for this year fell off the government’s work agenda in recent months because of these disagreements. The impasse was made plain on July 11th, when the official responsible for writing the AI law cautioned against prioritising either safety or expediency.

The decision will ultimately come down to what Mr Xi thinks. In June he sent a letter to Mr Yao, praising his work on AI. In July, at a meeting of the party’s Central Committee called the “third plenum”, Mr Xi sent his clearest signal yet that he takes the doomers’ concerns seriously. The official report from the plenum listed AI risks alongside other big concerns, such as biohazards and natural disasters. For the first time it called for monitoring AI safety, a reference to the technology’s potential to endanger humans. The report may lead to new restrictions on AI-research activities.

More clues to Mr Xi’s thinking come from the study guide prepared for party cadres, which he is said to have personally edited. China should “abandon uninhibited growth that comes at the cost of sacrificing safety”, says the guide. Since AI will determine “the fate of all mankind”, it must always be controllable, it goes on. The document calls for regulation to be pre-emptive rather than reactive.

Safety gurus say that what matters is how these instructions are implemented. China will probably create an AI-safety institute to observe cutting-edge research, as America and Britain have done, says Matt Sheehan of the Carnegie Endowment for International Peace, a think-tank in Washington. Which department would oversee such an institute is an open question. For now Chinese officials are emphasising the need to share the responsibility of regulating AI and to improve co-ordination.

If China does move ahead with efforts to restrict the most advanced AI research and development, it will have gone further than any other big country. Mr Xi says he wants to “strengthen the governance of artificial-intelligence rules within the framework of the United Nations”. To do that China will have to work more closely with others. But America and its friends are still considering the issue. The debate between doomers and accelerationists, in China and elsewhere, is far from over.
