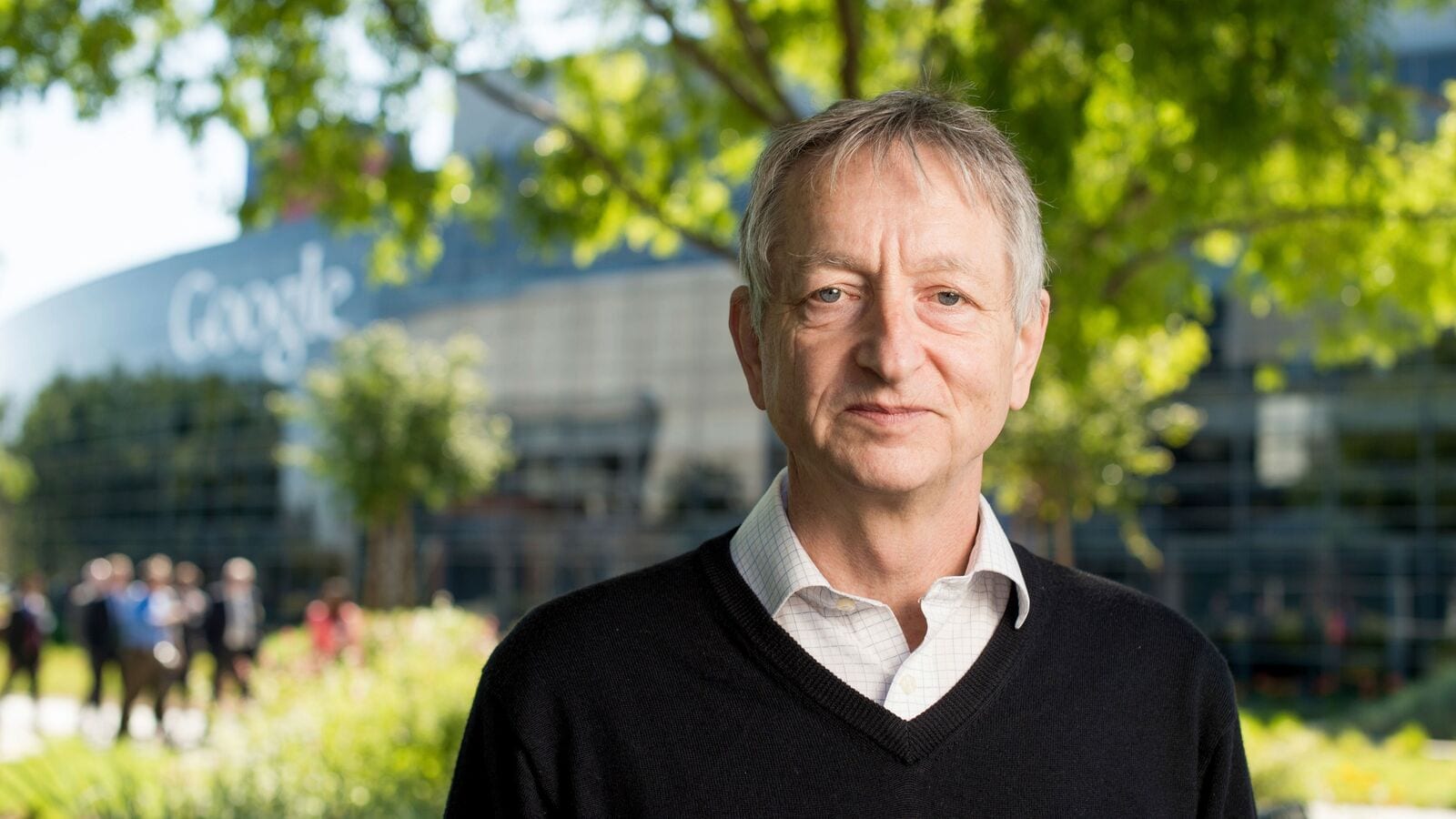

Artificial intelligence pioneer Geoffrey Hinton has once again expressed deep concerns about the pace and direction of AI development, warning that many leading tech companies continue to downplay the potential risks involved. Speaking on the One Decision podcast, Hinton criticised the lack of genuine urgency among industry leaders, despite a growing understanding of the possible consequences.

“Many of the people in big companies, I think, are downplaying the risk publicly,” he said. While Hinton acknowledged that some figures within the AI community are aware of the dangers, he suggested this awareness is often not reflected in their public stance.

Hinton pointed to Demis Hassabis, CEO of Google DeepMind, as someone who recognises the seriousness of the issue. According to Hinton, Hassabis “really wants to do something about” the potential for AI being exploited by malicious actors. DeepMind, founded in 2010 and acquired by Google four years later, remains central to the company’s AI research efforts.

The latest remarks build on earlier warnings issued by Hinton. In a separate interview on the Diary of a CEO podcast, he predicted large-scale displacement of white-collar workers due to AI systems. He described “mundane intellectual labour”, meaning routine office and administrative work, as especially vulnerable, suggesting that AI could perform tasks previously handled by several people.

“I think for mundane intellectual labour, AI is just going to replace everybody,” he said, adding that automated systems could soon outperform humans in such roles with ease.

By contrast, he said blue-collar occupations remain relatively insulated from immediate threat, noting that roles involving physical labour would be harder to automate in the short term. However, Hinton cautioned that even this may not hold true indefinitely.

His comments come amid ongoing global debate about how to regulate artificial intelligence and mitigate its potential impact on jobs, privacy, and safety. Despite repeated calls for stronger safeguards, concrete policy measures remain limited.
